EY refers to the global organization, and may refer to one or more, of the member firms of Ernst & Young Global Limited, each of which is a separate legal entity. Ernst & Young Global Limited, a UK company limited by guarantee, does not provide services to clients.

Quantum technologies promise to revolutionise business. But what questions need to be answered to ensure that they can be trusted?

In brief

- Business and technology leaders cannot risk waiting for quantum technologies and their use cases to mature before enabling quantum ethics.

- A successful transition to quantum will require existing cyber, data and AI governance capabilities to be expanded upon — but not replaced.

- Quantum technologies will magnify current organisational risks, particularly where quantum computing intersects with AI.

Quantum technologies have the potential to create opportunities for organisations in diverse sectors, whether expediting drug discovery, helping fight climate change, or optimising supply chains. However, quantum technologies also have the potential to present new business challenges and aggravate ongoing technology risks, particularly in the way that they will intersect with and augment other technologies, such as artificial intelligence (AI). In the past, societies have made the mistake of activating ethical dialogue and action only after technology oversights have occurred. With consumers — and regulators — now demanding greater trust in technology, the case for digital ethics has never been clearer.

Research from the University of Oxford notes that there is a “window during which technologies can be interrogated on questions around societal impact” before such technologies achieve everyday, practical use.¹ For quantum, our window is already open. Although quantum is still an emerging set of technologies, the disruption it could cause across multiple sectors merits early and thoughtful action.

By acting now, business leaders can create responsible strategies and road maps, and develop a trusted quantum ecosystem that is socially and environmentally sustainable by design. We are also calling on regulators to begin identifying standards and enabling oversight of quantum technologies to help businesses practise Responsible Research and Innovation (RRI), that is, innovation that is “socially desirable and … in the public interest.”²

In the following Quantum Intersection article, we set out the key ethical questions that businesses should be asking regarding quantum technologies and present three high-level behaviours that business leaders can adopt today to enable an ethics-first mindset towards quantum development: be proactive, proportional and practical.

Chapter 1

Security goes quantum

Is your data secured against the threat from future technological advances in quantum computing?

One of the key concerns associated with the widespread use of quantum computers is that they may make 2048-bit Rivest-Shamir-Adleman (RSA) cryptography and other widely used public-key encryption schemes obsolete. If this occurs, it would present serious data privacy and fraud risks to companies, governments, and consumers.³ In principle, quantum computers with a sufficient number of algorithmic quantum bits (qubits), known as cryptographically relevant quantum computers (CRQCs), will be able to efficiently factorise large integers using Shor’s algorithm, thereby breaking the encryption protocols whose security depends on that problem being hard.
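The quantum part of Shor’s algorithm is a single step: finding the order r of a number a modulo N, that is, the smallest r with a^r ≡ 1 (mod N). Everything else is classical number theory. The toy sketch below illustrates that reduction for small N, with a brute-force loop standing in for the quantum order-finding subroutine. The function names are illustrative, and for a 2048-bit modulus the brute-force loop is precisely the step that becomes infeasible classically.

```python
import math
import random


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This stands in for the quantum order-finding step of Shor's algorithm;
    classically its running time grows exponentially with the bit length of n.
    Assumes gcd(a, n) == 1, otherwise the loop would never terminate.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical_reduction(n: int, seed: int = 0) -> int:
    """Return a non-trivial factor of an odd composite n that is not a prime power."""
    rng = random.Random(seed)
    while True:
        a = rng.randrange(2, n - 1)
        g = math.gcd(a, n)
        if g > 1:
            return g  # lucky guess: a already shares a factor with n
        r = find_order(a, n)
        if r % 2 == 1:
            continue  # the reduction needs an even order; try another a
        y = pow(a, r // 2, n)
        if y == n - 1:
            continue  # a^(r/2) ≡ -1 (mod n) yields only trivial factors
        # y*y ≡ 1 but y is neither 1 nor -1 (mod n), so gcd(y - 1, n) is a proper factor
        return math.gcd(y - 1, n)
```

For example, `shor_classical_reduction(15)` returns 3 or 5, depending on which a is drawn. Only `find_order` would be replaced in a real quantum implementation.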

Even the most powerful of today’s supercomputers do not have the means to achieve the same feat.⁴; ⁵ Although CRQCs have not yet reached the requisite level of computational maturity, owing to ongoing engineering challenges, actors who do manage to access a CRQC in the future will be able to decrypt data protected by vulnerable schemes, forge digital signatures and impersonate individuals. As the UK’s National Cyber Security Centre (NCSC) has suggested, this is a pressing concern for everyone, because adversaries may be capturing data now with the intention of decrypting it later.⁶

Organisations need to consider this underlying security risk to prevent costly data breaches and uphold client trust. Technology leaders may therefore need to develop an approach for maintaining greater cryptographic agility, that is, the ability to swap cryptographic algorithms without re-engineering the systems that use them. Two primary quantum-secure solutions could help: post-quantum cryptography, which updates encryption techniques so that they rely on mathematical problems believed to be hard for both classical and quantum computers, and quantum-safe cryptography, which leverages properties of quantum mechanics to enable security via quantum key distribution (QKD).⁷
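In practice, cryptographic agility is largely an architectural choice: protected data carries an identifier for the algorithm that protected it, and a central policy decides which algorithms may still be used. The sketch below shows that pattern with Python’s standard `hmac` and `hashlib` modules. The registry, policy names and the two HMAC variants are illustrative stand-ins, not post-quantum schemes; a real migration would register vetted post-quantum algorithms from an established library in the same slots.

```python
import hashlib
import hmac

# Illustrative registry: algorithm identifier -> hash constructor for HMAC.
# These are symmetric stand-ins, NOT post-quantum schemes.
REGISTRY = {
    "hmac-sha256": hashlib.sha256,
    "hmac-sha3-256": hashlib.sha3_256,
}

# Illustrative policy: the algorithm used for new data, and those still accepted.
CURRENT_ALGORITHM = "hmac-sha3-256"
ALLOWED = frozenset({"hmac-sha256", "hmac-sha3-256"})


def protect(key: bytes, message: bytes, alg: str = CURRENT_ALGORITHM) -> tuple[str, bytes]:
    """Tag a message and record which algorithm produced the tag."""
    return alg, hmac.new(key, message, REGISTRY[alg]).digest()


def verify(key: bytes, message: bytes, envelope: tuple[str, bytes],
           allowed: frozenset = ALLOWED) -> bool:
    """Check a tag, refusing algorithms that policy has retired."""
    alg, tag = envelope
    if alg not in allowed or alg not in REGISTRY:
        return False
    expected = hmac.new(key, message, REGISTRY[alg]).digest()
    return hmac.compare_digest(expected, tag)
```

Retiring an algorithm then becomes a policy change (removing its identifier from the allowed set) rather than a code change in every consumer, which is the property that makes a later post-quantum migration manageable.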

Leadership will have to work with their technology and transformation teams to understand the enhanced and latent risks of breaches, and to prioritise new quantum-secure methods of data sharing and communication within external products and core operations.

Chapter 2

Adopting quantum cloud-based services

How can you prepare for a transition to the quantum cloud?

As the quantum ecosystem — the community of academics, start-ups and established technology firms — grows and matures, so do the options for organisations to process their data in the cloud. Quantum cloud computing should democratise the market by enabling individuals and businesses to gain access to exceptional processing power, without having to build and maintain their own quantum-computing infrastructure. Quantum cloud services will be especially important in the next three to five years, because they will permit experimentation before quantum computers become more widely available.

In some cases, traditional providers of classical cloud-computing resources may also become providers of quantum cloud computing. However, during experimentation, piloting and early development of quantum use cases, the ecosystem may remain diverse and fractured. The development of ‘full stack’ quantum computing capabilities could take some time, as companies and start-ups focus on hardware and software elements separately. This is why it is important for technology leaders to clarify what data could be shared across organisational boundaries, what data actually is shared and how that sharing occurs.

Just as with existing classical cloud services, careful consideration must be given to the location of quantum cloud providers, as well as their servers and processors, as data transfers to and between different jurisdictions may give rise to unforeseen legal obligations or poor consumer protections.⁸

Furthermore, the hype around quantum and its underlying complexity make it more difficult to critically assess suppliers’ risk practices and to avoid entering partnerships that pose reputational risk. For example, quantum cloud services are likely to be more expensive than their classical counterparts initially, owing to the costs of developing and maintaining quantum hardware.⁹ To stay relevant in the quantum space, businesses may feel pressured to engage newer, lower-cost vendors with whom they have little or no experience. Some vendors, seeking to capitalise on the excitement around quantum computing, may have insufficient data governance standards or even be engaged in work that is contrary to your company’s mission and values.

Public knowledge of such a partnership can damage consumer trust and — in some cases — lead to financial penalties. Clarity and awareness of data governance policies and protocols, as well as methodical due diligence checks on quantum service providers, will be essential in enabling maximum flexibility to evaluate quantum opportunities, whilst minimising risk and encouraging RRI.

Chapter 3

Emerging technologies, emerging ethical risks

How will you manage the enhanced ethical risks associated with quantum technologies?

In certain applications, quantum computing has the potential to process staggering quantities of data faster than ever before. This potential has made AI and machine learning highly desirable and anticipated applications of quantum computing. With an ability to train models on vast amounts of multidimensional data at speed, we may unlock the ability to generate more powerful models and deliver better predictions. However, whilst exciting, the prospect of quantum machine learning raises similar ethical concerns to those posed by traditional machine learning on classical computers, such as algorithmic bias, poor explainability and inappropriate use cases.

In the Global Risks Report 2021, the World Economic Forum identifies the possible adverse outcomes of quantum computing on individuals, businesses’ ecosystems and economies as one of its key technological risks.¹⁰ Without early intervention, quantum-enabled AI stands to accelerate digital ethics pitfalls and make them substantially more complex.

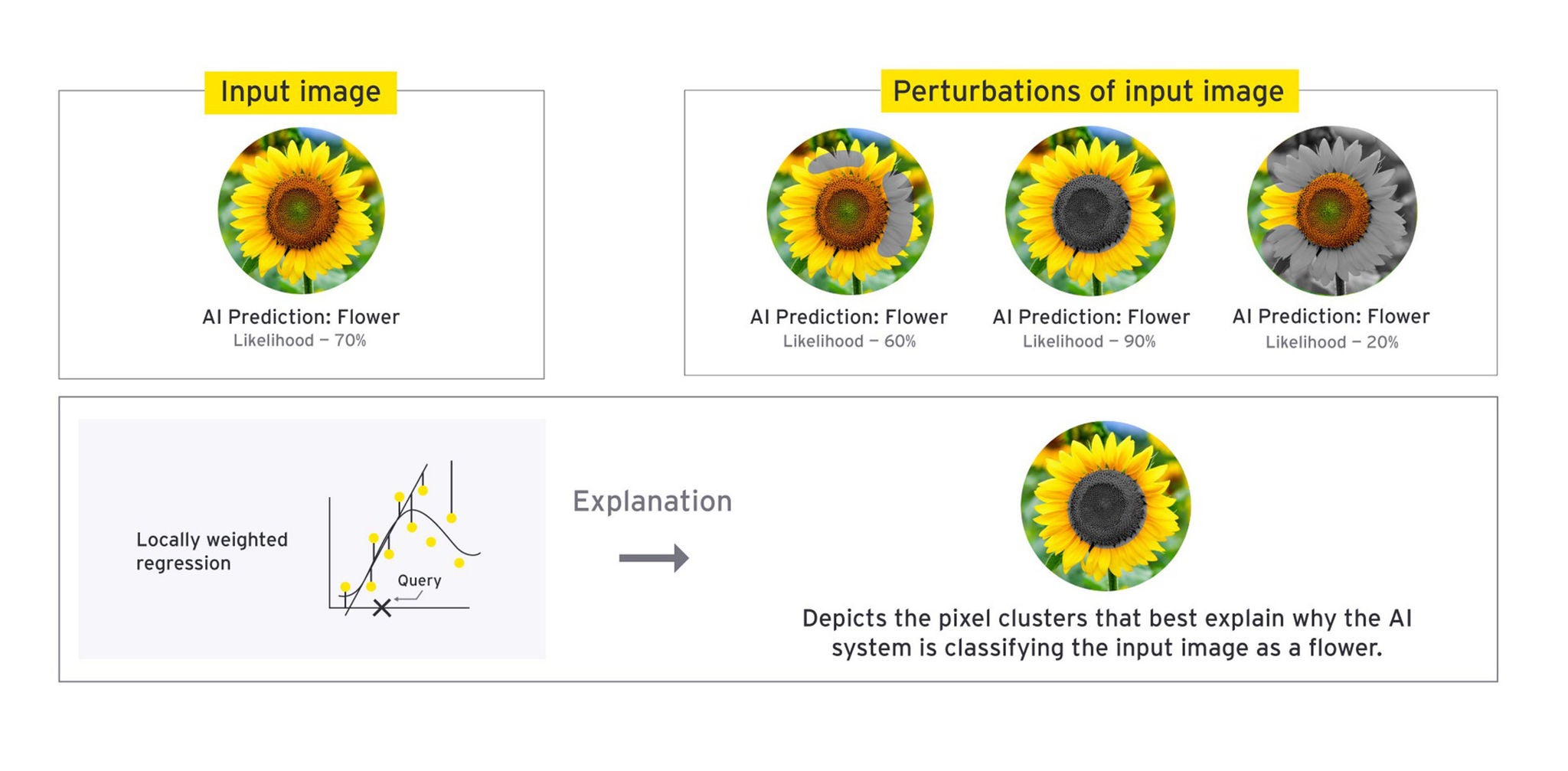

Consider explainability (also called interpretability), which refers to how well a person can understand how an AI system has generated a prediction, decision or output, and how that explanation is subsequently communicated to an end user or system designer.¹¹ Explainable AI can improve trust in AI systems by providing pathways for humans to understand the internal logic of an algorithm, recognise instances of discrimination or bias and inform system accountability.

Some AI use cases demand explainability, such as a machine learning tool used to detect cancer from medical imaging data, or one used to automate loan decisions. In addition to generating an output (the likelihood of cancer or the creditworthiness of a client), we also need to understand which factors contribute to this output to enable informed, auditable decisions. If system designers have used only black-box techniques in the development of such systems, they may not be able to explain to users, regulators, broader society or even themselves the factors that have contributed to an AI output. Beyond the potential for reputational damage, early efforts by governments around the world to regulate AI, for example via the EU Artificial Intelligence Act, may expose companies to economic and legal risks in the future if considerations such as explainability are not addressed in the design, development and maintenance of AI systems.¹⁵
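To make ‘which factors contribute to this output’ concrete, the sketch below scores a hypothetical loan applicant with a transparent linear model and reports each feature’s contribution. The feature names, weights and bias are invented for illustration. Real credit models, and especially black-box ones, need more sophisticated post-hoc explanation techniques, and it is this kind of explanation that may be harder to obtain from quantum models.

```python
# Hypothetical, hand-set weights for an illustrative linear credit score.
WEIGHTS = {"income_k": 0.04, "missed_payments": -0.8, "years_employed": 0.1}
BIAS = -1.0


def score_with_explanation(applicant: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the score and per-feature contributions, largest effect first."""
    # In a linear model each feature's contribution is simply weight * value.
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


score, factors = score_with_explanation(
    {"income_k": 50, "missed_payments": 2, "years_employed": 5}
)
print(f"score = {score:.2f}")
for name, contribution in factors:
    print(f"  {name}: {contribution:+.2f}")
```

Here a reviewer can see that income pushed the score up and missed payments pulled it down, which is the auditable trail the paragraph above calls for.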

Although enabling explainable AI in a classical-computing environment is not easy, achieving explainable quantum-AI models could be even more difficult. Initial research has noted that the underlying physical properties of quantum computers (for example, superposition and entanglement) make it impossible to audit quantum computations directly, and impractical to replicate them with classical computers at scale.¹⁶ This means that AI use cases that require an explanation may not be appropriate applications for quantum computing, at least until supplementary quantum explanation tools are developed, widely available and credible.
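A back-of-the-envelope calculation shows why replicating quantum computations classically quickly becomes infeasible. A brute-force (dense state-vector) simulation of n qubits must store 2^n complex amplitudes; assuming 16 bytes per amplitude (double-precision complex numbers), memory doubles with every added qubit. This ignores cleverer simulation methods, which can do much better for some circuits, so it illustrates scaling rather than a hard limit.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a dense state vector: 2**n_qubits complex amplitudes."""
    return (2 ** n_qubits) * bytes_per_amplitude


GIB = 2 ** 30
for n in (30, 40, 50):
    # 30 qubits -> 16 GiB; each extra 10 qubits multiplies memory by 1,024.
    print(f"{n} qubits: {statevector_bytes(n) / GIB:,.0f} GiB")
```

This prints 16 GiB, 16,384 GiB and 16,777,216 GiB respectively: at 50 qubits the state vector alone occupies 16 PiB (2^54 bytes).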

Similarly, there is reason to believe that some fairness metrics and techniques used in traditional machine learning may no longer be applicable after a quantum transition, owing to the opaque nature of quantum states.¹⁷; ¹⁸ More research attention and investment at the intersection of fairness, explainability and quantum computing are required to build a comprehensive view of the limitations of quantum machine learning and to safeguard communities against algorithmic harms.

Boards, governments and consumers are more concerned about, and more aware of, the risks associated with poorly designed and mismanaged technology than in previous decades.¹⁹ To meet the increasing societal demands for trustworthy technology, the future of quantum technologies should be informed by lessons learned from previous digital ethics failures (for example, from instances where individuals’ data was misappropriated or misused, where algorithms were employed to amplify misinformation and hateful content online, and where marginalised groups faced added discrimination through the use of unrepresentative data sets and unfair models).

Business leaders will need to liaise with their technology teams and engage in rigorous ethical risk assessments to ensure that quantum machine learning and broader quantum road maps are feasible and proportionate to business needs. Furthermore, ongoing research and collaboration with the fields of digital ethics and RRI are necessary to better understand the technical and non-technical ways in which quantum can align with existing ethical norms and principles. Some companies have already recognised the synergies between AI ethics and quantum computing ethics: Google, for example, announced in 2019 that its AI-ethics principles would be applied to its quantum programme to mitigate misuse.²⁰ All leadership teams will need to sharpen their focus on ethics and trust, which will mean ensuring that approaches to data selection, modelling, delivery and monitoring are enhanced and subject to additional scrutiny.

Finally, the adage ‘just because we can does not mean we should’ rings true when constructing ethical guidance fit for the quantum era. Quantum technologies, once sufficiently mature, may enable previously unfeasible or intractable processes to be carried out with relative ease. However, in unlocking new computational frontiers, there is no guarantee that quantum technologies will only be used in the best interests of society and the planet. For example, there is a concern that actors may harness quantum’s augmented ability to simulate molecules to develop materials that support unethical practices, such as cheaper but more environmentally harmful chemicals. The same concern applies to quantum computing for combinatorial optimisation problems, if individuals elect to optimise solely for cost savings to the detriment of social or environmental good.

Whilst this article has primarily focused on the possible downstream implications of quantum computing, other quantum technologies, in areas ranging from sensing to communication, must also be subjected to rigorous ethical scrutiny to ensure that projects are initiated and maintained in accordance with the aims of RRI. Consider quantum sensors, which leverage quantum mechanical properties to obtain more sensitive, holistic and accurate measurements. Such sensors could provide the richer data required to optimise traffic or detect diseases. However, they may also give actors even greater means to engage in fine-grained, covert surveillance. If applied unethically, quantum-sensing technologies could be used to compromise individuals’ privacy and, in more extreme cases, to suppress dissent and degrade autonomy.

Business leaders, academics, regulators, governments and civil society organisations must work together to set out clear limits on what constitutes ethical and unethical use cases for quantum technologies. As environmental stewardship becomes increasingly important for communities contending with the worsening effects of climate change, companies must also consider the intersection between quantum and sustainability. This includes efforts to minimise the environmental footprint of our broader computing ecosystem, as well as seizing opportunities for quantum technologies to fight climate change and contribute to social good, such as enabling the discovery of new materials that facilitate carbon capture.

Chapter 4

Three key behaviours for quantum innovators

To achieve an ethics-first mindset, you need to be proactive, proportional and practical.

Once quantum technologies have matured, it will be too late to prevent all possible harms. For this reason, we encourage organisations to adopt an ethics-first mindset towards quantum development: an operating approach that continuously embeds RRI into technology life cycles. However, it can be difficult for business leaders to understand what an ethics-first mindset means for their day-to-day activities. To make this easier, we have identified three high-level behaviours to embody in your quantum journey that will allow you to manifest this mindset and forge an ethical future for these technologies.

Be proactive

To help ensure an ethical future for the quantum ecosystem, business and technology leaders need to act now. In the past, opportunities were missed to develop ethical guidelines as new technologies were emerging. This allowed significant issues to materialise, from racist facial recognition algorithms to discriminatory curriculum vitae-screening tools. A great deal of the societal harm, reputational damage and opportunity cost associated with big data and AI might have been mitigated had organisations collectively adopted an ethics-first mindset.²¹ Business leaders owe it to their clients, colleagues and broader society to adopt a more proactive position with quantum.

Being proactive can take many forms. Chief technology officers can start by having discussions with senior leadership and technology teams about how quantum technologies might fit into the organisation’s long-term strategy and road map. Similarly, teams can adapt and refine existing governance policies and processes for cybersecurity, data, and AI, or develop new ones in preparation for a quantum transition. For example, your business could carry out an internal ethical-AI audit to ensure that any work on quantum is built upon a robust underlying governance infrastructure and culture. To further enhance these efforts, chief risk officers should create a new registry to track quantum risks centrally as the technologies mature.

Human resources (HR) leaders can also develop a plan for attracting, retaining and upskilling quantum talent, including people well versed in the technical aspects of quantum mechanics as well as individuals with socio-technical expertise in the ethics of emerging technologies. Finally, businesses can stay engaged with broader industry and academic communities on issues related to quantum governance. Conversations on this front are already happening, as evidenced by the World Economic Forum’s recent publication on the topic.²²

Be proportional

Too often, it seems, companies and public sector bodies pursue disruptive technology for technology’s sake without fully accounting for the associated economic, operational and reputational risks. This can lead to situations where investment in a technical solution is not merited, because a non-technical solution may provide a simpler, more cost-effective way to satisfy business requirements. It may also lead to situations where a technical solution is used as an inadequate substitute for remedying broader, systemic injustices.

A frequently cited example is COMPAS, an AI-enabled risk assessment tool adopted by several US courts to predict the likelihood of recidivism and guide judicial decision-making (for example, on pre-trial release and sentencing). As reported by ProPublica, both the tool and the process through which it was implemented were flawed. Among defendants who did not reoffend, it disproportionately ranked African American individuals as high risk for recidivism compared with their white counterparts and, therefore, contributed to the marginalisation already facing the black community in the American criminal justice system.²³
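The disparity ProPublica described is a gap in false positive rates: among people who did not reoffend, one group was labelled high risk more often than another. A minimal sketch of that metric follows. The records are invented toy data, not COMPAS or ProPublica data, and the function name is illustrative.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """records: iterable of (group, predicted_high_risk, reoffended) tuples.

    FPR per group = share of people who did NOT reoffend but were labelled high risk.
    """
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted_high, reoffended in records:
        if not reoffended:  # only people who did not reoffend can be false positives
            negatives[group] += 1
            if predicted_high:
                false_pos[group] += 1
    return {g: false_pos[g] / negatives[g] for g in negatives}


# Invented toy data: (group, predicted high risk, actually reoffended)
toy = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("A", True, True), ("B", True, True),
]
rates = false_positive_rate_by_group(toy)
print(rates)  # {'A': 0.5, 'B': 0.25}
```

In this toy data, group A’s non-reoffenders are wrongly flagged twice as often as group B’s, even though both groups contain the same number of non-reoffenders.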

The rationale for adopting COMPAS was that it would improve the quality of judicial decisions made by courts. However, the solution was not proportional to the problem. Courts adopted a technical solution as a catch-all for a non-technical problem and, ultimately, generated the very unfairness that they sought to avoid.

As with AI, to plan effectively for the quantum era, businesses will need to be realistic about where quantum technologies are likely to provide strategic advantage. With respect to encryption, whilst Shor’s algorithm cannot yet be run at a scale that threatens real-world keys, there is some evidence that quantum computers will be substantially better at integer factorisation.²⁴ Similarly, there is some evidence that quantum computers will be substantially better at molecular simulation for materials science.²⁵ However, we will need to wait for further developments to prove that quantum algorithms provide a definitive advantage over existing classical alternatives.²⁶

Just as some problems do not necessitate the development of an AI system, not all business problems will be suited to quantum — whether for ethical reasons or for mere simplicity. The winners of quantum disruption will be those that innovate proportionately to business problems.

Be practical

When conversations about the ethics of emerging technologies arise in business contexts, so too do concerns about so-called ‘ethics washing’, which the MIT Technology Review defines as instances “where genuine action gets replaced by superficial promises.”²⁷ We saw ethics washing during the rise of AI, when companies announced commitments to broad ethical principles without connecting them to tangible actions to improve AI development and governance.

Ethics are not public relations tools to increase the credibility of a company — though this may be a side effect of a sincere and robust engagement with ethics. Instead, they are long-lasting commitments that connect to moral obligations to act in the best interest of society. Without genuine reflection, honesty and an increased allocation of resources towards technology governance, efforts to establish quantum ethics and associated governance guidelines may risk being labelled as performative, and degrade trust in your business.

In your quantum journey, consider what existing values are embedded in your organisation (for example, fairness and transparency) and ensure that they are also embodied in your quantum strategies through practical, actionable steps. Quantum ethics cannot merely be stated. They must be continuously operationalised. The principles underlying your business, and your existing commitments to ethics, are where your quantum strategy should begin, not end.

Chapter 5

Plan for disruption by using an ethics-first mindset

You can no longer afford for ethics to be an afterthought.

At this early stage in quantum’s maturation, we are poised to establish quantum ethics that will enable the responsible use of quantum technologies. By asking the key questions outlined above, and by embodying an ethics-first mindset through being proactive, proportional and practical, business and technology leaders will be well positioned for a successful quantum transition when the time comes. Bringing ethics into conversations about disruptive technologies before disruption occurs can help organisations establish an informed foundation for future consumer trust, acquire more holistic oversight of current and future risks, and ultimately enable the development of technology that is aligned with societal needs and values.

Summary

We know that a disruptive technology’s impact on society depends largely on how, and when, organisations and societies choose to govern it. We also know that delaying ethical dialogue, and the practical implementation of technology ethics, can have serious and lasting consequences for organisations and communities alike. Quantum technologies promise to accelerate intelligence opportunities across sectors. It is now time for business and technology leaders to commit to making this intelligence trusted before the opportunity closes.
